Edited by kjakx on 2022-09-25 18:52:27

First version

Tags: SPRESENSE

Article type: Project

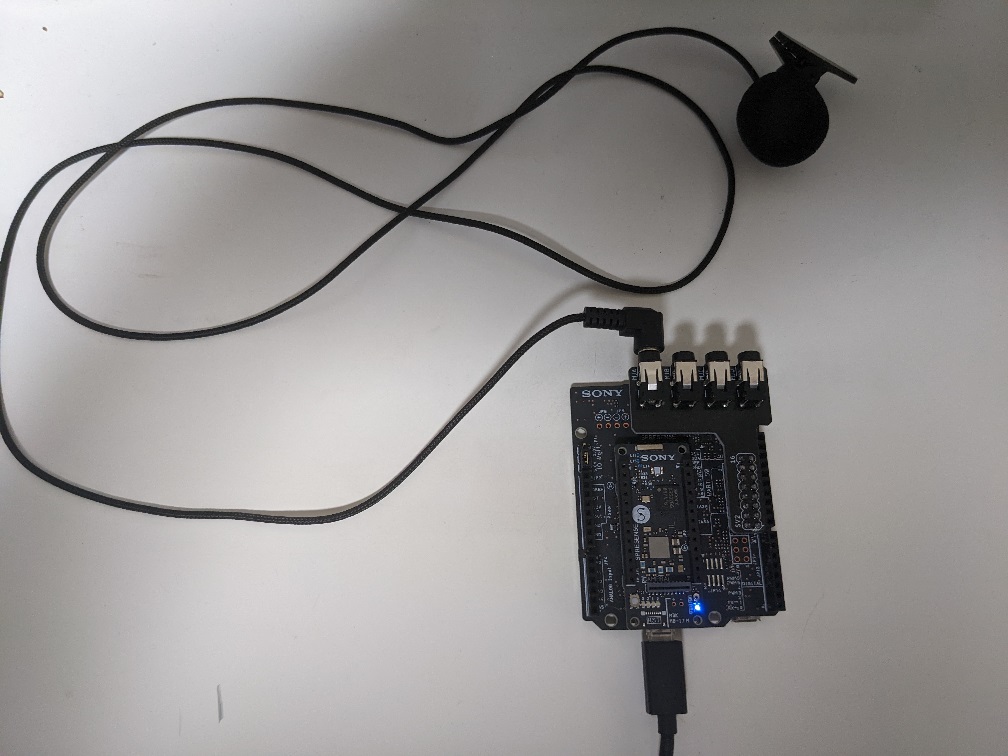

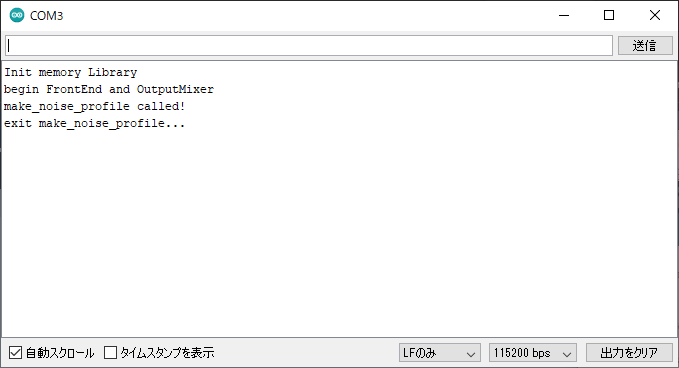

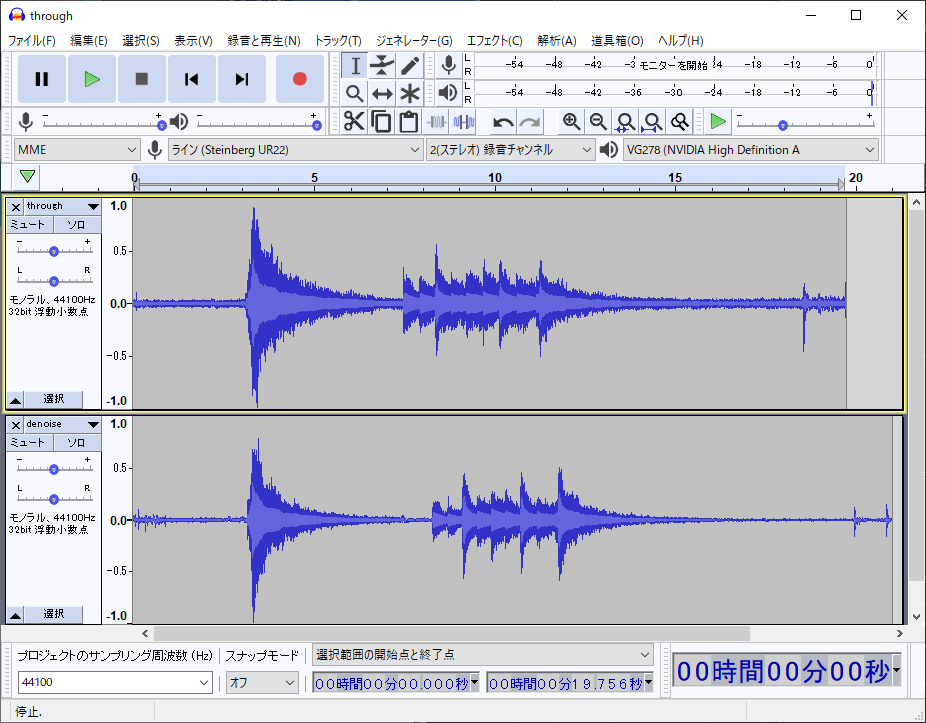

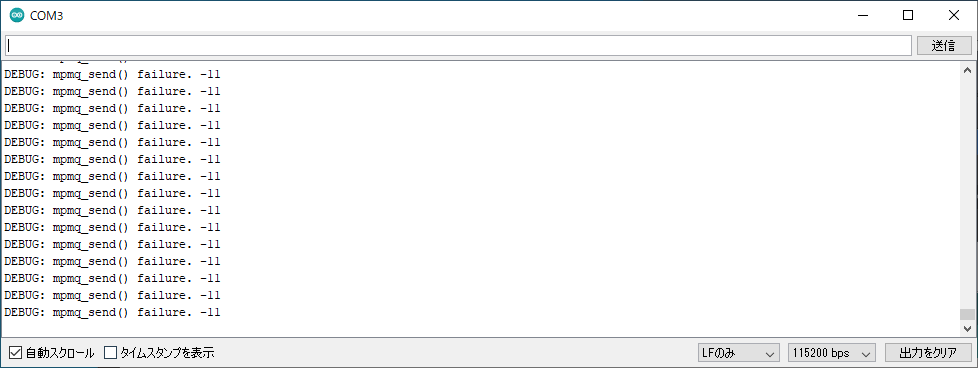

# I Wanted to Build a Noise-Removal Microphone with SPRESENSE

## Background
I have been spending more time at home lately because of COVID-19, so I have been practicing guitar.
Recording guitar in my room picks up environmental noise, so I tried building a microphone with a noise-removal function.

## Parts
- SPRESENSE main board
- SPRESENSE extension board
- [Mic&LCD KIT for SPRESENSE](https://akizukidenshi.com/catalog/g/gM-16589/)

## Design diagram
Connect the parts as shown in the photo. The LCD is not needed this time, so it is left unused.

## Source code
I used a technique called spectral subtraction.
It captures the magnitude spectrum of the background noise and subtracts it from the magnitude spectrum of the audio in order to remove the noise.
The code is based on voice_effector from the SPRESENSE sketch examples.

```arduino:MainAudio.ino
/*
 *  MainAudio.ino - FFT Example with Audio (voice changer)
 *  Copyright 2019, 2021 Sony Semiconductor Solutions Corporation
 *
 *  This library is free software; you can redistribute it and/or
 *  modify it under the terms of the GNU Lesser General Public
 *  License as published by the Free Software Foundation; either
 *  version 2.1 of the License, or (at your option) any later version.
 *
 *  This library is distributed in the hope that it will be useful,
 *  but WITHOUT ANY WARRANTY; without even the implied warranty of
 *  MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.  See the GNU
 *  Lesser General Public License for more details.
 *
 *  You should have received a copy of the GNU Lesser General Public
 *  License along with this library; if not, write to the Free Software
 *  Foundation, Inc., 51 Franklin St, Fifth Floor, Boston, MA  02110-1301  USA
 */

#include <MP.h>
#include <FrontEnd.h>
#include <OutputMixer.h>
#include <MemoryUtil.h>
#include <arch/board/board.h>

FrontEnd *theFrontEnd;
OutputMixer *theMixer;

/* Setting audio parameters */
static const int32_t channel_num  = AS_CHANNEL_MONO;
static const int32_t bit_length   = AS_BITLENGTH_16;
static const int32_t frame_sample = 1024;
static const int32_t capture_size = frame_sample * (bit_length / 8) * channel_num;
static const int32_t render_size  = frame_sample * (bit_length / 8) * 2;

/* Multi-core parameters */
const int subcore = 1;

struct Request {
  void *buffer;
  int  sample;
};

struct Result {
  void *buffer;
  int  sample;
};

/* Application flags */
bool isCaptured = false;
bool isEnd = false;
bool ErrEnd = false;

/**
 * @brief Frontend attention callback
 *
 * When audio internal error occurs, this function will be called back.
 */
static void frontend_attention_cb(const ErrorAttentionParam *param)
{
  puts("Attention!");

  if (param->error_code >= AS_ATTENTION_CODE_WARNING) {
    ErrEnd = true;
  }
}

/**
 * @brief OutputMixer attention callback
 *
 * When audio internal error occurs, this function will be called back.
 */
static void mixer_attention_cb(const ErrorAttentionParam *param)
{
  puts("Attention!");

  if (param->error_code >= AS_ATTENTION_CODE_WARNING) {
    ErrEnd = true;
  }
}

/**
 * @brief Frontend done callback procedure
 *
 * @param [in] event        AsMicFrontendEvent type indicator
 * @param [in] result       Result
 * @param [in] sub_result   Sub result
 *
 * @return true on success, false otherwise
 */
static bool frontend_done_callback(AsMicFrontendEvent ev, uint32_t result, uint32_t sub_result)
{
  UNUSED(ev);
  UNUSED(result);
  UNUSED(sub_result);
  return true;
}

/**
 * @brief Mixer done callback procedure
 *
 * @param [in] requester_dtq    MsgQueId type
 * @param [in] reply_of         MsgType type
 * @param [in,out] done_param   AsOutputMixDoneParam type pointer
 */
static void outputmixer_done_callback(MsgQueId requester_dtq,
                                      MsgType reply_of,
                                      AsOutputMixDoneParam* done_param)
{
  UNUSED(requester_dtq);
  UNUSED(reply_of);
  UNUSED(done_param);
  return;
}

/**
 * @brief Pcm capture on FrontEnd callback procedure
 *
 * @param [in] pcm          PCM data structure
 */
static void frontend_pcm_callback(AsPcmDataParam pcm)
{
  int8_t sndid = 100; /* user-defined msgid */
  static Request request;

  if (pcm.size > capture_size) {
    puts("Capture size is too big!");
    pcm.size = capture_size;
  }

  request.buffer = pcm.mh.getPa();
  request.sample = pcm.size / (bit_length / 8) / channel_num;

  if (!pcm.is_valid) {
    puts("Invalid data !");
    /* Clear the whole buffer: the length is in bytes, not samples */
    memset(request.buffer, 0, pcm.size);
  }

  MP.Send(sndid, &request, subcore);

  if (pcm.is_end) {
    isEnd = true;
  }

  return;
}

/**
 * @brief Mixer data send callback procedure
 *
 * @param [in] identifier   Device identifier
 * @param [in] is_end       For normal request give false, for stop request give true
 */
static void outmixer0_send_callback(int32_t identifier, bool is_end)
{
  /* Do nothing, as the PCM data was already sent in the main loop.
   */
  UNUSED(identifier);
  UNUSED(is_end);
  return;
}

static bool send_mixer(Result* res)
{
  AsPcmDataParam pcm_param;

  /* Alloc MemHandle */
  while (pcm_param.mh.allocSeg(S0_REND_PCM_BUF_POOL, render_size) != ERR_OK) {
    delay(1);
  }

  if (isEnd) {
    pcm_param.is_end = true;
    isEnd = false;
  } else {
    pcm_param.is_end = false;
  }

  /* Set PCM parameters */
  pcm_param.identifier = OutputMixer0;
  pcm_param.callback = 0;
  pcm_param.bit_length = bit_length;
  pcm_param.size = render_size;
  pcm_param.sample = frame_sample;
  pcm_param.is_valid = true;

  memcpy(pcm_param.mh.getPa(), res->buffer, pcm_param.size);

  int err = theMixer->sendData(OutputMixer0,
                               outmixer0_send_callback,
                               pcm_param);

  if (err != OUTPUTMIXER_ECODE_OK) {
    printf("OutputMixer send error: %d\n", err);
    return false;
  }

  return true;
}

/**
 *  @brief Setup Audio & MP objects
 */
void setup()
{
  int8_t rcvid = 0;

  /* Initialize serial */
  Serial.begin(115200);
  while (!Serial);

  /* Initialize memory pools and message libs */
  Serial.println("Init memory Library");

  initMemoryPools();
  createStaticPools(MEM_LAYOUT_RECORDINGPLAYER);

  /* Begin objects */
  theFrontEnd = FrontEnd::getInstance();
  theMixer = OutputMixer::getInstance();

  theFrontEnd->begin(frontend_attention_cb);
  theMixer->begin();
  puts("begin FrontEnd and OutputMixer");

  /* Create Objects */
  theMixer->create(mixer_attention_cb);

  /* Set capture clock */
  theFrontEnd->setCapturingClkMode(FRONTEND_CAPCLK_NORMAL);

  /* Activate objects */
  theFrontEnd->activate(frontend_done_callback);
  theMixer->activate(OutputMixer0, outputmixer_done_callback);
  usleep(100 * 1000); /* waiting for Mic startup */

  /* Initialize each objects */
  AsDataDest dst;
  dst.cb = frontend_pcm_callback;
  theFrontEnd->init(channel_num, bit_length, frame_sample, AsDataPathCallback, dst);

  /* Set rendering volume */
  theMixer->setVolume(0, 0, 0);

  /* Unmute */
  board_external_amp_mute_control(false);

  theFrontEnd->start();

  /* Launch SubCore */
  int ret = MP.begin(subcore);
  if (ret < 0) {
    printf("MP.begin error = %d\n", ret);
  }

  /* receive with
     non-blocking */
  // MP.RecvTimeout(20);
}

/**
 * @brief Audio loop
 */
void loop()
{
  int8_t rcvid = 0;
  static Result* result;

  /* Receive sound from SubCore */
  int ret = MP.Recv(&rcvid, &result, subcore);

  if (ret < 0) {
    printf("MP error! %d\n", ret);
    return;
  }

  if (result->sample != frame_sample) {
    printf("Size miss match.%d,%ld\n", result->sample, frame_sample);
    goto exitCapturing;
  }

  if (!send_mixer(result)) {
    printf("Rendering error!\n");
    goto exitCapturing;
  }

  /* This sleep is adjusted by the time to write the audio stream file.
   * Please adjust in according with the processing contents
   * being processed at the same time by Application.
   *
   * The usleep() function suspends execution of the calling thread for usec microseconds.
   * But the timer resolution depends on the OS system tick time
   * which is 10 milliseconds (10,000 microseconds) by default.
   * Therefore, it will sleep for a longer time than the time requested here.
   */
  // usleep(10 * 1000);

  return;

exitCapturing:
  board_external_amp_mute_control(true);
  theFrontEnd->deactivate();
  theMixer->deactivate(OutputMixer0);
  theFrontEnd->end();
  theMixer->end();

  puts("Exit.");
  exit(1);
}
```

```arduino:SubNoise.ino
/*
 *  SubFFT.ino - FFT Example with Audio (voice changer)
 *  Copyright 2019 Sony Semiconductor Solutions Corporation
 *
 *  This library is free software; you can redistribute it and/or
 *  modify it under the terms of the GNU Lesser General Public
 *  License as published by the Free Software Foundation; either
 *  version 2.1 of the License, or (at your option) any later version.
 *
 *  This library is distributed in the hope that it will be useful,
 *  but WITHOUT ANY WARRANTY; without even the implied warranty of
 *  MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.  See the GNU
 *  Lesser General Public License for more details.
 *
 *  You should have received a copy of the GNU Lesser General Public
 *  License along with this library; if not, write to the Free Software
 *  Foundation, Inc., 51 Franklin St, Fifth Floor, Boston, MA  02110-1301  USA
 */

#include <MP.h>
#include <math.h>
#include <float.h> /* FLT_MAX */

#include "FFT.h"

/*-----------------------------------------------------------------*/
/*
 * FFT parameters
 */
/* Select FFT length */

//#define OVERLAP 0

//#define FFT_LEN 32
//#define FFT_LEN 64
//#define FFT_LEN 128
//#define FFT_LEN 256
//#define FFT_LEN 512
#define FFT_LEN 1024
//#define FFT_LEN 2048
//#define FFT_LEN 4096

/* Number of channels*/
#define MAX_CHANNEL_NUM 1
//#define MAX_CHANNEL_NUM 2
//#define MAX_CHANNEL_NUM 4

/* Parameters */
const int g_channel = MAX_CHANNEL_NUM; /* Number of channels */
const int g_result_size = 8;           /* Result buffer size */

FFTClass<MAX_CHANNEL_NUM, FFT_LEN> FFT;

arm_rfft_fast_instance_f32 iS;

/* Allocate the larger heap size than default */
USER_HEAP_SIZE(64 * 1024);

/* MultiCore definitions */
struct Request {
  void *buffer;
  int  sample;
};

struct Result {
  void *buffer;
  int  sample;
};

/* Mean magnitude spectrum of the background noise */
static float meanMagnNoise[FFT_LEN / 2] = {0.0};

/* Magnitude of each complex bin in the FFT result */
void magn_from_fft(float* fft, float* magn) {
  /* The count is in complex samples: FFT_LEN floats hold FFT_LEN / 2 bins.
   * Passing FFT_LEN here would read and write past the end of both buffers. */
  arm_cmplx_mag_f32(fft, magn, FFT_LEN / 2);
}

/* Phase of each complex bin in the FFT result */
void phase_from_fft(float* fft, float* phase) {
  for (int i = 0; i < FFT_LEN / 2; i++) {
    phase[i] = atan2(fft[2 * i + 1], fft[2 * i]);
  }
}

/* Spectral subtraction: magnOut = max(magnIn - meanMagnNoise, 0) */
void subtract_noise(float* magnIn, float* magnOut) {
  static float noiseSubtracted[FFT_LEN / 2];
  arm_sub_f32(magnIn, meanMagnNoise, noiseSubtracted, FFT_LEN / 2); // subtract noise spectrum
  arm_clip_f32(noiseSubtracted, magnOut, 0.0, FLT_MAX, FFT_LEN / 2); // negative value to zero
}

/* Rebuild complex bins from magnitude and phase */
void fft_from_magn_phase(float* magn, float* phase, float* fft) {
  for (int i = 0; i < FFT_LEN / 2; i++) {
    fft[2 * i] = magn[i] * arm_cos_f32(phase[i]);
    fft[2 * i + 1] = magn[i] * arm_sin_f32(phase[i]);
  }
}

void make_noise_profile(unsigned long timespan) {
  printf("make_noise_profile called!\n");
  int ret;
  int8_t rcvid;
  Request *request;
  int cnt_noise_add = 0;
  float magnTmp[FFT_LEN / 2];

  // collect the noise profile for `timespan` milliseconds
  bool time_limit_exceed = false;
  unsigned long start = millis();
  while (!time_limit_exceed) {
    unsigned long now = millis();
    if (now - start > timespan) time_limit_exceed = true;

    ret = MP.Recv(&rcvid, &request);
    if (ret >= 0) {
      FFT.put((q15_t*)request->buffer, request->sample);
    }

    while (!FFT.empty(0)) {
      int cnt = FFT.get(magnTmp, 0);
      for (int i = 0; i < FFT_LEN / 2; i++) {
        meanMagnNoise[i] += magnTmp[i];
      }
      cnt_noise_add += 1;
    }
  }

  // calculate noise spectrum mean (skip if no frame arrived, to avoid dividing by zero)
  if (cnt_noise_add > 0) {
    for (int i = 0; i < FFT_LEN / 2; i++) {
      meanMagnNoise[i] /= (float)cnt_noise_add;
    }
  }
  printf("exit make_noise_profile...\n");
}

void setup()
{
  Serial.println("subcore setup");
  int ret = 0;
  int sndid = 10;

  /* Initialize MP library */
  ret = MP.begin();
  if (ret < 0) {
    errorLoop(2);
  }

  /* Set the receive timeout (value kept from the original sketch) */
  MP.RecvTimeout(100000);

  /* begin FFT */
  FFT.begin(WindowRectangle, MAX_CHANNEL_NUM, 0);

  /* Initialize the instance used for the IFFT; the length follows FFT_LEN */
  arm_rfft_fast_init_f32(&iS, FFT_LEN);

  // make noise profile
  make_noise_profile(3000);
}

void loop()
{
  int ret;
  int8_t sndid = 10; /* user-defined msgid */
  int8_t rcvid;
  Request *request;
  static Result result[g_result_size];

  static float fftIn[FFT_LEN];
  static float magnIn[FFT_LEN / 2];
  static float phaseIn[FFT_LEN / 2];
  static float magnDenoised[FFT_LEN / 2];
  static float fftOut[FFT_LEN];
  static float wavOut[FFT_LEN];
  //static q15_t wavOverlap_q15[OVERLAP] = {0};
  static q15_t wavOut_q15[FFT_LEN];
  static q15_t bufOut[g_result_size][FFT_LEN * 2]; // stereo
  static int pos = 0;

  /* Receive PCM captured buffer from MainCore */
  ret = MP.Recv(&rcvid, &request);
  if (ret >= 0) {
    FFT.put((q15_t*)request->buffer, request->sample);
  }

  while (!FFT.empty(0)) {
    // 1. Perform FFT for current frame
    int cnt = FFT.get_raw(fftIn, 0);

    // 2. get magnitude and phase from fft result
    magn_from_fft(fftIn, magnIn);
    phase_from_fft(fftIn, phaseIn);

    // 3. subtract noise magnitude from magnitude of input
    subtract_noise(magnIn, magnDenoised);

    // 4. convert denoised magnitude and original phase to fft result
    fft_from_magn_phase(magnDenoised, phaseIn, fftOut);

    // 5. IFFT
    arm_rfft_fast_f32(&iS, fftOut, wavOut, 1);

    // 6. Send result to main core
    arm_float_to_q15(wavOut, wavOut_q15, FFT_LEN);
    for (int j = 0; j < cnt; j++) {
      bufOut[pos][j * 2] = wavOut_q15[j];
      bufOut[pos][j * 2 + 1] = 0;
    }

    result[pos].buffer = (void*)MP.Virt2Phys(&bufOut[pos][0]);
    result[pos].sample = cnt;

    ret = MP.Send(sndid, &result[pos], 0);
    pos = (pos + 1) % g_result_size;
    if (ret < 0) {
      errorLoop(11);
    }
  }
}

void errorLoop(int num)
{
  int i;

  while (1) {
    for (i = 0; i < num; i++) {
      ledOn(LED0);
      delay(300);
      ledOff(LED0);
      delay(300);
    }
    delay(1000);
  }
}
```

## Usage
After startup, the serial monitor shows the messages described below.
For 3 seconds after "make_noise_profile called!" appears, the sketch records the magnitude spectrum of the background noise, so stay quiet.
Once "exit make_noise_profile..." appears, it is ready.

## Results
I compared the sound through the microphone built here with the pass-through sound (using through from the SPRESENSE sketch examples).
I connected the SPRESENSE headphone jack to the LINE IN of an audio interface and recorded in a DAW.
The through recording picks up environmental noise such as passing cars, while in the denoise recording the environmental noise sounds suppressed to some degree.

@[twitter](https://twitter.com/k_jak_x/status/1573963497519521793)

The waveforms also suggest that the noise amplitude is reduced (top: through, bottom: noise removal).

## Problems
The environmental noise was suppressed, but the low range was lost and crackling noise appeared in the high range.
The code runs the FFT with a rectangular window and no overlap, which may be one cause (I tried to implement overlap processing as well, but gave up because I could not resolve an error of unknown cause).
Also, environmental noise is mostly low-frequency sound, so it is to be expected that this method removes the low range.
In addition, after running for a while, an error appears and recording stops working. I do not really know the cause.

## Summary
I built a noise-removal microphone with SPRESENSE, but it ended up adding noise instead.
Along the way I read the source of the SPRESENSE libraries and used ARM's CMSIS DSP library, which taught me a lot.
I am also trying autoencoder-based noise processing with Neural Network Console, and I will post about it if it works out.
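
For reference, the overlap processing that the article names as a possible fix for the crackling can be sketched in plain C++, independent of the SPRESENSE libraries. This is only a minimal sketch under assumptions, not the code used in this article: the names `makePeriodicHann`, `processFrame`, and `overlapAdd` are hypothetical, the hop is assumed to be half the frame length, and `processFrame` is a stand-in that leaves the frame unchanged where the FFT, noise subtraction, and IFFT would go. It shows that a periodic Hann window with 50% overlap sums to 1, so frames that are not modified add back up to the original signal without seams at the frame boundaries.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Periodic Hann window: w[n] = 0.5 * (1 - cos(2*pi*n / N)).
// With a hop of N/2, w[n] + w[n + N/2] == 1 for every n in [0, N/2).
std::vector<float> makePeriodicHann(std::size_t frameLen) {
  const double kPi = 3.14159265358979323846;
  std::vector<float> w(frameLen);
  for (std::size_t n = 0; n < frameLen; ++n) {
    const double x = 2.0 * kPi * static_cast<double>(n) / static_cast<double>(frameLen);
    w[n] = static_cast<float>(0.5 * (1.0 - std::cos(x)));
  }
  return w;
}

// Hypothetical placeholder for the per-frame work (FFT, noise subtraction, IFFT).
// It intentionally leaves the frame unchanged in this sketch.
void processFrame(std::vector<float>& frame) {
  (void)frame;
}

// Split the input into half-overlapping frames, window each frame,
// process it, and add it back into the output at the same position.
std::vector<float> overlapAdd(const std::vector<float>& input, std::size_t frameLen) {
  assert(frameLen >= 2 && frameLen % 2 == 0);
  const std::size_t hop = frameLen / 2;
  const std::vector<float> window = makePeriodicHann(frameLen);

  std::vector<float> output(input.size(), 0.0f);
  std::vector<float> frame(frameLen);

  for (std::size_t start = 0; start + frameLen <= input.size(); start += hop) {
    for (std::size_t n = 0; n < frameLen; ++n) {
      frame[n] = input[start + n] * window[n];
    }
    processFrame(frame);
    for (std::size_t n = 0; n < frameLen; ++n) {
      output[start + n] += frame[n];
    }
  }
  return output;
}
```

Only samples covered by two frames are reconstructed fully; the first and last half frame are faded by the window. In a real-time sketch the same idea would need the previous half frame carried over between calls, which this example does not attempt.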